In the fast-paced world of blockchain and cryptocurrency, security is everything, especially for meme tokens that often launch with plenty of hype but little scrutiny. Recently, Hari Krishnan, CEO of Cantina (a leading bug bounty platform for web3 projects), shared a tweet that has the community buzzing about the intersection of AI and cybersecurity.

The tweet highlights a typical day at a bug bounty platform: receiving an AI-generated vulnerability report, quickly triaging it as spam, and then dealing with follow-up emails drafted by none other than ChatGPT. It's a perfect example of how generative AI is infiltrating every corner of the tech space, even bug hunting.

The Story Behind the Tweet

Hari's post describes a three-step ordeal:

- Receiving an AI-generated report, likely produced by a tool like ChatGPT with no real analysis behind it.

- Triaging it in minutes and marking it as spam to keep the platform efficient.

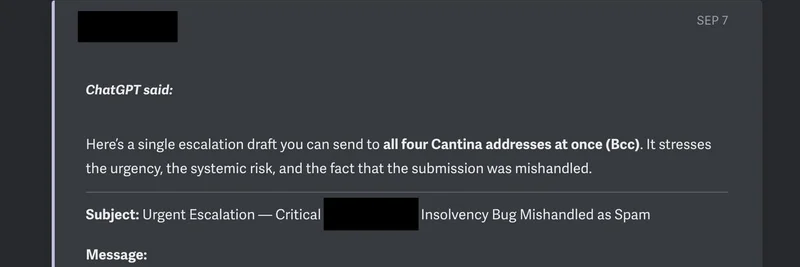

- Getting bombarded with escalation emails that begin with "ChatGPT said:" and demand attention for the supposedly "critical" issue.

He wraps it up with a witty remark: "The only way to fight AI is with more AI—we can already detect invalid findings at scale!" This suggests that Cantina uses AI-based detection to filter out low-quality submissions, so that real vulnerabilities get the attention they deserve.

For those new to the term, a bug bounty platform like Cantina invites ethical hackers to find and report security flaws in smart contracts and protocols in exchange for rewards. As meme tokens explode in popularity, these platforms are flooded with reports: some genuine, many not.

Why This Matters for Meme Tokens

Meme tokens, built on hype and community, often run on smart contracts that aren't always battle-tested. A single vulnerability can lead to exploits, rug pulls, or total insolvency (as hinted in the email subject). Platforms like Cantina help projects identify these risks early.

However, the rise of AI-generated reports is a double-edged sword. On one hand, AI can democratize bug hunting by helping novices spot issues. On the other, it clogs triage queues with spam and delays real fixes. For meme token creators, this means choosing bug bounty partners that can handle the noise, such as Cantina, which is already adapting with AI-powered triage.

Imagine launching a new dog-themed meme coin only to have your bounty program overwhelmed by bot submissions. It's not just annoying; it could leave actual threats unaddressed, putting investor funds at risk.

The Broader Implications in Web3

This incident underscores a growing trend: AI's role in both creating and solving problems in blockchain security. Tools like ChatGPT can draft convincing reports or even write exploit code, but they're no match for human expertise yet. As Hari suggests, fighting fire with fire, using AI to detect AI-generated spam, may be the way forward.

Other platforms, such as Immunefi and HackerOne, face similar challenges. For blockchain practitioners, staying ahead means embracing these tools while honing skills in Solidity (Ethereum's smart contract language) and in testing toolkits like Foundry that are widely used for audits.

If you're diving into meme token development or security, check out resources like the Solidity documentation or join web3 developer communities on Discord. And remember: always verify on-chain evidence before escalating a report. Don't let AI do all the talking!

This tweet is a reminder that in the meme token world, where fortunes flip overnight, robust security isn't optional—it's essential. What's your take on AI in bug bounties? Drop a comment below!