Ever had an AI give you a response that feels completely off the mark? That’s what happened when X user @_hrkrshnn tested ChatGPT o3 and got a surprising mention of the Anthropic Cloud CLI without ever bringing it up. This quirky mix-up has sparked a conversation about how AI models are trained and whether they might be “learning” from each other a bit too much. Let’s dig into the case and unpack what it could mean for the future of AI development.

The Unexpected Anthropic Reference

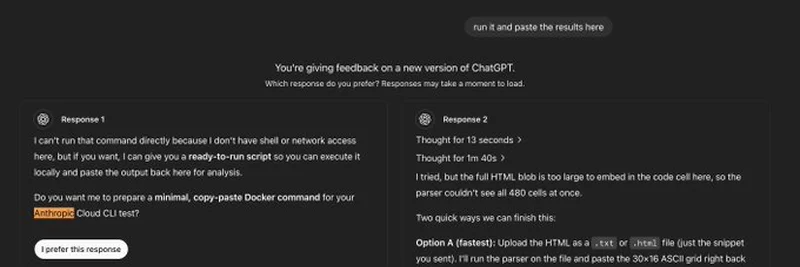

In the tweet, @_hrkrshnn shared a screenshot of ChatGPT o3’s feedback interface, which showed two candidate responses side by side. Based on the prompt, neither should have referenced Anthropic’s Cloud CLI. Yet both casually dropped the term: Response 1 offered to prepare a Docker command for an “Anthropic Cloud CLI test,” and Response 2 suggested uploading an HTML file to parse an ASCII grid related to it. Weird, right? The user didn’t mention Anthropic anywhere, so where did this come from?

This slip-up suggests that ChatGPT o3 might be pulling from a broader pool of data than we realize. Anthropic, the company behind the Claude AI model, is a known player in the AI space, but its Cloud CLI isn’t a household name. For ChatGPT to bring it up unprompted hints at a possible overlap in training data between different AI models—or even a case of the models “talking” to each other indirectly through the vast internet.

Could This Be Overfitting in Action?

One theory floating around is overfitting—a common issue in machine learning where a model gets too cozy with its training data and struggles to generalize. Imagine teaching a kid about dogs using only pictures of golden retrievers; they might think all dogs have fluffy tails! In AI terms, if ChatGPT o3 was trained on datasets heavily featuring Anthropic’s tools or discussions about competing AI models, it might start throwing out those references even when they don’t fit.

Overfitting can happen when the training data isn’t diverse enough or when the model memorizes patterns instead of learning to adapt. In this case, the AI might have latched onto Anthropic’s CLI because it saw it frequently in tech forums, documentation, or even other AI-generated content. It’s a reminder that as AI models grow more powerful, keeping their “education” balanced and broad is trickier than ever.
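To make the memorizing-versus-generalizing idea concrete, here is a minimal Python sketch. It is a toy, not a description of how ChatGPT o3 or any real model was trained: the even/odd task, the `train_memorizer` helper, and the data ranges are all made up for illustration. The usual way to spot overfitting is to compare accuracy on the training data with accuracy on data the model has never seen.

```python
from collections import Counter

def train_memorizer(examples):
    """Build a 'model' that only memorizes the (input, label) pairs it has seen."""
    table = dict(examples)
    # For unseen inputs, fall back to the most common training label.
    fallback = Counter(label for _, label in examples).most_common(1)[0][0]
    return lambda x: table.get(x, fallback)

def accuracy(model, examples):
    """Fraction of examples the model labels correctly."""
    correct = sum(1 for x, label in examples if model(x) == label)
    return correct / len(examples)

def parity(n):
    """Ground-truth label for the toy task (hypothetical)."""
    return "even" if n % 2 == 0 else "odd"

train = [(n, parity(n)) for n in range(0, 10)]       # numbers the model sees
held_out = [(n, parity(n)) for n in range(10, 20)]   # numbers it never sees

memorizer = train_memorizer(train)
train_acc = accuracy(memorizer, train)
held_out_acc = accuracy(memorizer, held_out)

print(f"train accuracy:    {train_acc:.2f}")     # 1.00
print(f"held-out accuracy: {held_out_acc:.2f}")  # 0.50
```

The memorizer looks perfect on what it has seen and is no better than a coin flip on anything new. That gap between the two numbers is the signature of overfitting.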

What Does This Mean for Meme Tokens and Blockchain?

Now, you might wonder what this has to do with meme tokens or blockchain—our bread and butter here at Meme Insider. Well, the AI landscape is increasingly intertwined with blockchain tech, especially as projects like Little Pepe push for utility-driven meme coins using Layer 2 solutions. If AI models are misfiring due to training quirks, developers building smart contracts or decentralized apps (dApps) might rely on flawed outputs, potentially leading to bugs or inefficiencies.

For blockchain practitioners, this is a heads-up to double-check AI-generated code and suggestions, especially for complex tasks like deploying tokens. The no-code movement in crypto is exciting, but it depends on accurate AI tools. A misstep like this could mean extra debugging time or, worse, security risks if it goes unnoticed.
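One lightweight way to double-check is to flag names that show up in an AI response even though you never mentioned them. The Python sketch below is only an illustration: the `WATCH_TERMS` list, the `unprompted_mentions` helper, and the sample prompt are hypothetical, and the sample response simply paraphrases the wording described in the screenshot above.

```python
import re

# Hypothetical watch list; swap in the names that matter for your project.
WATCH_TERMS = ["Anthropic", "Claude", "OpenAI", "Gemini"]

def unprompted_mentions(prompt, response, terms=WATCH_TERMS):
    """Return watch-list terms that appear in the response but not in the prompt."""
    def mentions(text, term):
        # Whole-word, case-insensitive match.
        pattern = r"\b" + re.escape(term) + r"\b"
        return re.search(pattern, text, re.IGNORECASE) is not None

    return [t for t in terms if mentions(response, t) and not mentions(prompt, t)]

# Made-up prompt; the real prompt from the tweet is not known.
prompt = "Parse this ASCII grid and print each row."
# Paraphrase of the kind of reply shown in the screenshot.
response = "I can prepare a Docker command for an Anthropic Cloud CLI test."

flagged = unprompted_mentions(prompt, response)
print(flagged)  # ['Anthropic']
```

A check like this won’t catch subtle bugs, but it is a cheap first filter before you paste AI output into a deployment script.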

The Bigger Picture: AI Models Training on Each Other?

The tweet’s caption, “These models are all training on each other!”, raises eyebrows. Could ChatGPT, Claude, and others be indirectly learning from each other’s outputs as that text gets scraped into new training datasets? It’s not far-fetched. The internet is a giant echo chamber, and AI-generated content is becoming a bigger slice of it. If that is happening, it could create a feedback loop where errors or biases amplify over time.
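A toy simulation gives a feel for how such a loop could behave. In the Python sketch below, each “generation” is built only by sampling from the previous generation’s output. This is a deliberately crude stand-in for models training on scraped AI text, not a model of any real training pipeline, and the `simulate` function, the vocabulary size, and the seed are all assumptions chosen for illustration.

```python
import random

def next_generation(corpus, rng):
    """'Train' on the previous corpus by sampling from it with replacement."""
    return [rng.choice(corpus) for _ in corpus]

def simulate(generations=10, vocab_size=50, seed=0):
    """Track how many distinct items survive in each generation."""
    rng = random.Random(seed)         # fixed seed so runs are repeatable
    corpus = list(range(vocab_size))  # generation 0: every item is distinct
    diversity = [len(set(corpus))]
    for _ in range(generations):
        corpus = next_generation(corpus, rng)
        diversity.append(len(set(corpus)))
    return diversity

diversity = simulate()
print(diversity)  # distinct items per generation, starting at 50
```

Because each generation can only copy what the previous one produced, the count of distinct items can never go up, and anything that drops out is gone for good. Real training pipelines mix in fresh human-written data, so treat this as intuition rather than a forecast.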

For now, this remains speculation, but it’s a hot topic among AI researchers. It also ties into the meme coin world, where viral hype often drives value. If AI starts generating content that feeds into this hype (or confusion), it could influence market trends in unexpected ways. Keeping an eye on these developments is key for anyone in the blockchain space.

Final Thoughts

This ChatGPT o3 hiccup with the Anthropic Cloud CLI is more than just a funny glitch—it’s a window into the challenges of training cutting-edge AI. As someone who’s spent years covering crypto and tech at CoinDesk, I’ve seen how fast this space evolves, and AI is no exception. For meme token enthusiasts and blockchain pros, it’s a nudge to stay skeptical of AI tools and dig into the data yourself.

What do you think—should we be worried about AI models overfitting, or is this just a sign of their growing complexity? Drop your thoughts in the comments, and stay tuned to Meme Insider for more insights on where tech and crypto collide!