Hey folks, if you're knee-deep in the wild world of crypto mining or AI compute, you've probably wondered: how long do these pricey GPUs really last before they're toast? Everyone's throwing around numbers—3 years on the low end, maybe 7 if you're optimistic—but what's the real story? Turns out, a fresh thread from Meltem Demirors on X is spilling the beans with hard data from Aravolta, and it's a wake-up call for anyone financing hardware in the blockchain space.

Meltem, a thermodynamics enthusiast and investor at Crucible Capital, kicked things off with a bang: "One of the most important questions in GPU financing is the rate of depreciation." She's spot on. In our meme token hunts and DeFi plays, we often overlook the hardware humming in the background. But as GPU fleets scale up for mining, training meme-inspired AI models, or powering decentralized compute networks, getting this wrong can tank your returns faster than a rug pull.

The Modeling Myth: What We Thought vs. What We Got

Investors and operators have been modeling GPU lifespans all over the map. Conservative folks pencil in 3 years of useful life, while the bulls stretch it to 7. It's all based on assumptions about "occasional" spikes in usage, smooth thermal envelopes, and routine maintenance. But assumptions are like that one viral meme coin—fun until they flop.

Enter Aravolta's telemetry data, pulled straight from the GPU level across real-world deployments. They tracked multiple cohorts of GPUs, zeroing in on operational gremlins like thermal stress (those heat spikes that make fans scream), power stress (when you're juicing every watt for a mining burst), and workload intensity (think constant max-out runs for blockchain validation or model training).

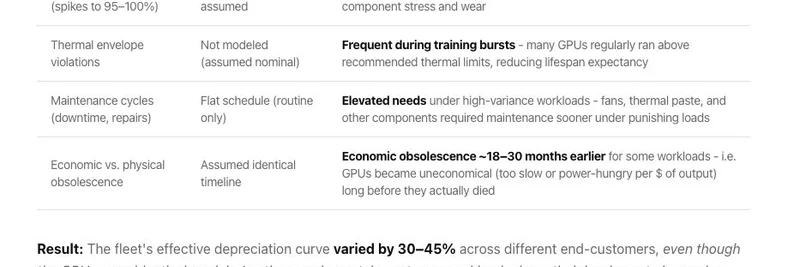

The results? Brutal. Here's a quick breakdown of expected vs. actual impacts, straight from the data Meltem shared:

Workload Intensity: We figured spikes to 95-100% usage were rare "occasional" events. Nope. They're happening daily, hammering components with frequent max utilization. That accelerated stress and wear? It's shortening hardware life way quicker than planned.

Thermal Envelope Violations: Assumed to be a non-issue, with temperatures modeled as staying within normal limits. Reality: violations are frequent during training bursts, with many GPUs routinely blowing past recommended thermal limits. Cue a shorter expected lifespan, as fans and thermal paste wear out under high-variance loads.

Maintenance Cycles (Downtime, Repairs): Thought it'd be a flat, routine schedule. Wrong again. Maintenance needs run higher and arrive sooner: punishing, high-variance workloads wear out fans and thermal paste faster, forcing earlier interventions.

Economic vs. Physical Obsolescence: We banked on the two arriving on the same timeline. But get this: for some workloads, GPUs are becoming economically obsolete 18-30 months before they physically wear out. Why? They're too slow or too power-hungry per dollar of output to stay competitive, even before physical failure kicks in.
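Curious what "happening daily" looks like in your own fleet? Here's a minimal Python sketch, assuming you already collect per-GPU telemetry as (timestamp, utilization %, temperature °C) readings. The `Sample` type, the `summarize` function, and the 95% spike and 83 °C limit defaults are hypothetical placeholders, not Aravolta's schema or method; swap in your hardware vendor's actual thermal spec. It reports the share of days with at least one near-max spike and the share of readings above the thermal limit.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Sample:
    """One hypothetical telemetry reading for a single GPU."""
    ts: datetime
    utilization_pct: float  # 0-100
    temp_c: float           # degrees Celsius


def summarize(samples, spike_pct=95.0, temp_limit_c=83.0):
    """Share of days with at least one utilization spike, and share of readings above the thermal limit.

    spike_pct and temp_limit_c are illustrative defaults, not vendor specs.
    """
    if not samples:
        raise ValueError("need at least one sample")
    all_days, spike_days, over_limit = set(), set(), 0
    for s in samples:
        day = s.ts.date()
        all_days.add(day)
        if s.utilization_pct >= spike_pct:
            spike_days.add(day)
        if s.temp_c > temp_limit_c:
            over_limit += 1
    return {
        "spike_day_share": len(spike_days) / len(all_days),
        "over_limit_share": over_limit / len(samples),
    }


# Toy data: three days of readings for one GPU
samples = [
    Sample(datetime(2024, 1, 1, 9), 97.0, 85.0),
    Sample(datetime(2024, 1, 1, 15), 60.0, 70.0),
    Sample(datetime(2024, 1, 2, 9), 80.0, 78.0),
    Sample(datetime(2024, 1, 3, 9), 99.0, 84.0),
]
summary = summarize(samples)
print(summary)
```

On this toy data, two of the three days show a spike and half the readings run hot. If your model assumed "occasional" spikes, a spike-day share creeping toward 1.0 is the red flag.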

In plain English? The fleet's effective depreciation curve varies by a whopping 30-45% across different end-customers, even on identical GPU models. Certain workloads—like those intense crypto mining sessions or AI fine-tuning for the next big meme narrative—drive hardware to lose value almost half again as fast as others.

A Stark Perspective: From 5 Years to 3.7 (And Counting)

To hammer it home, take one cohort of GPUs expected to chug along for 5+ years of useful life. Under real use, they're trending toward just 3.7 years. That's a gap of at least 1.3 years between expectation and effective life, more than a quarter of the planned lifespan gone. And the ripple effects? Massive.
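To see what that gap does to the books, here's a minimal straight-line depreciation sketch in Python. Only the 5-year and 3.7-year lives come from the thread; the $30,000 purchase price and zero salvage value are made-up illustration numbers, and real-world depreciation curves are rarely this tidy.

```python
def straight_line_book_value(cost, life_years, age_years, salvage=0.0):
    """Book value after age_years of straight-line depreciation, never below salvage."""
    if life_years <= 0:
        raise ValueError("life_years must be positive")
    annual_charge = (cost - salvage) / life_years
    return max(salvage, cost - annual_charge * age_years)


COST = 30_000.0  # hypothetical purchase price per GPU, USD

for life in (5.0, 3.7):
    annual = COST / life
    value_at_3y = straight_line_book_value(COST, life, 3.0)
    print(f"life={life} yrs  annual charge=${annual:,.0f}  book value at year 3=${value_at_3y:,.0f}")

# Ratio of annual charges: 5 / 3.7, roughly 1.35
speedup = 5.0 / 3.7
print(f"depreciation runs {speedup - 1:.0%} faster")
```

Dividing 5 by 3.7 means the annual charge runs about 35% higher, and by year three the shorter-lived GPU carries less than half the book value of the 5-year one. For what it's worth, that's in the same ballpark as the 30-45% spread across end-customers.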

Think cascading hits to salvage value (goodbye, resale dreams), loan terms (shorter amortization schedules mean bigger monthly payments), covenant triggers (lenders get twitchy), and the overall debt-vs-equity mix in your portfolio. In the trillion-dollar (and exploding) GPU financing economy, this isn't trivia; it's table stakes.
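Here's how a shorter useful life can ripple into loan terms, as a minimal Python sketch using the standard payment formula for a fully amortizing loan. The $30,000 principal, the 10% APR, and the idea of matching the loan term to useful life (60 months vs. 44 months, roughly 3.7 years) are all hypothetical assumptions for illustration.

```python
def monthly_payment(principal, annual_rate, months):
    """Level monthly payment for a fully amortizing loan (standard annuity formula)."""
    if months <= 0:
        raise ValueError("months must be positive")
    r = annual_rate / 12
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)


PRINCIPAL = 30_000.0  # hypothetical amount financed per GPU, USD
RATE = 0.10           # hypothetical 10% APR

for label, months in (("5.0-year term", 60), ("3.7-year term", 44)):
    pmt = monthly_payment(PRINCIPAL, RATE, months)
    total_interest = pmt * months - PRINCIPAL
    print(f"{label}: payment ${pmt:,.2f}/mo, total interest ${total_interest:,.2f}")
```

On these toy numbers the shorter term pushes the monthly payment from roughly $637 to roughly $817, while total interest actually drops. The pain shows up as cash-flow strain per GPU, which is exactly what covenants and debt sizing react to.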

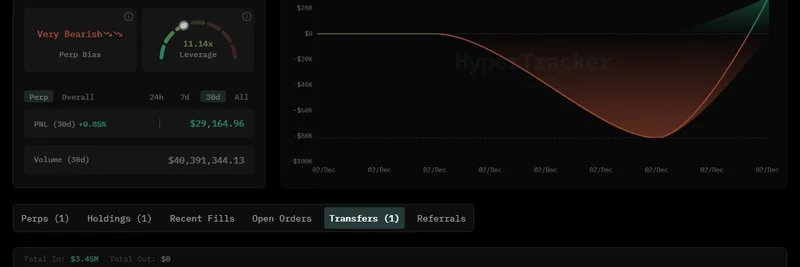

Meltem's thread doesn't stop at the doomscroll. She's bullish on solutions: "We'll be using Aravolta's telemetry-based, asset-level monitoring on our own GPU deployment to actively price and manage residual value risk." (Full disclosure from her: Crucible Cap is an investor in Aravolta.) Imagine dynamic utilization pricing—charge more for those heavy-load leases—or hedging depreciation like you would volatility in a meme token pump.
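What might dynamic utilization pricing look like in practice? One simple approach, sketched below in Python, is to scale the depreciation piece of a lease by a per-customer wear multiplier inferred from telemetry. This is our own hypothetical illustration, not Crucible's or Aravolta's pricing model; `monthly_lease_rate`, the multipliers, the $30,000 cost, $3,000 salvage, 60-month baseline life, and 20% margin are all assumptions.

```python
def monthly_lease_rate(cost, salvage, baseline_life_months, wear_multiplier, margin=0.0):
    """Hypothetical lease rate: straight-line depreciation over a wear-adjusted life, plus a margin.

    wear_multiplier = 1.0 means the customer's workload ages hardware as the baseline assumes;
    1.45 means telemetry suggests it ages about 45% faster, so effective life shrinks accordingly.
    """
    if baseline_life_months <= 0 or wear_multiplier <= 0:
        raise ValueError("baseline life and wear multiplier must be positive")
    effective_life_months = baseline_life_months / wear_multiplier
    depreciation_per_month = (cost - salvage) / effective_life_months
    return depreciation_per_month * (1 + margin)


# Illustrative customers: baseline, plus the low and high ends of a 30-45% faster-wear spread
for name, multiplier in (("light load", 1.00), ("heavy load", 1.30), ("max load", 1.45)):
    rate = monthly_lease_rate(30_000, 3_000, 60, multiplier, margin=0.20)
    print(f"{name:>10}: ${rate:,.2f}/month")
```

The design is deliberately boring: treat heavier wear as a shorter effective life, recover the same depreciable amount over fewer months, and let margin and any hedging sit on top.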

Echoing the vibe, Solana co-founder Anatoly Yakovenko chimed in: "Faster depreciation is actually bullish. It means technology and demand are redlining." Spot on. In blockchain, where compute powers everything from proof-of-stake validators to NFT generators, this accelerated cycle signals roaring demand. But it also screams for smarter tools.

Why This Matters for Meme Token Hunters and Blockchain Builders

At Meme Insider, we're all about demystifying the tech behind the tokens. Sure, that dog-themed coin might moon on hype, but sustainable projects need robust hardware fleets. This data underscores why telemetry monitoring isn't optional—it's essential for pricing risks in GPU-backed mining ops or decentralized AI networks.

If you're a practitioner eyeing GPU investments, check out Aravolta's full blog post for the deep dive. It's packed with modeling insights that could save you from overpaying on leases or underselling salvage.

What's your take? Are you seeing faster GPU churn in your setups? Drop a comment below; we're building this knowledge base together. And hey, if dynamic hedging sounds like the next big thing, keep an eye on firms like Crucible Capital. The future of crypto compute just got a whole lot more precise.