Imagine strapping on a pair of sleek Ray-Ban shades that don't just look cool; they put the conversations around you right into your field of view. That's the magic Meta showed off at a recent TechCrunch event, as captured in this viral tweet from VaderResearch. The star of the show? Real-time subtitles that turn speech into text on the lenses, with translation on top, making everyday chit-chat accessible and multilingual.

In the demo video, a curly-haired presenter (rocking the futuristic frames) chats casually on stage while his words pop up as crisp overlays in real time. "When I watch TV, I pretty much always have the subtitles on," he says, and the captions keep pace with him, recovering quickly from a brief glitch. No awkward pauses, just text floating above the action, which makes it easier to follow along whether you're hard of hearing or just zoning out during a noisy conference call. And the kicker? It handles translations too. Picture negotiating a deal in Tokyo while English subtitles appear in your peripheral vision, or catching up on a French podcast without missing a beat.

This isn't some clunky headset; the display is built right into the Ray-Ban Display glasses, blending style with smarts. Meta is pushing boundaries here, evolving its AR lineup from basic notifications to full-on contextual aids. For the uninitiated, AR (augmented reality) layers digital information over the real world, like Pokémon GO but for productivity. These glasses use onboard microphones and AI to transcribe audio live and display the text discreetly, so you stay engaged in the conversation without pulling out your phone.
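For the curious, here is a minimal Python sketch of how live captions might be squeezed onto a tiny lens display. It assumes a streaming speech recognizer that emits unstable "partial" text that keeps getting revised, then committed "final" text, and a display that fits only a couple of short lines. `CaptionBuffer`, its methods, and the width and line limits are hypothetical illustrations, not Meta's implementation or API.

```python
import textwrap


class CaptionBuffer:
    """Hypothetical rolling caption buffer for a small heads-up display.

    Assumption: the recognizer sends partial hypotheses that may change,
    followed by a final version of the same segment that will not change.
    """

    def __init__(self, width: int = 32, max_lines: int = 2) -> None:
        self.width = width          # characters per display line (assumed)
        self.max_lines = max_lines  # lines visible at once (assumed)
        self.final_text = ""        # committed transcript text
        self.partial_text = ""      # latest unstable hypothesis

    def update_partial(self, text: str) -> None:
        # Replace (not append) the unstable tail with the newest guess.
        self.partial_text = text

    def finalize(self, text: str) -> None:
        # Commit the finished segment and clear the pending partial.
        self.final_text = f"{self.final_text} {text}".strip()
        self.partial_text = ""

    def render(self) -> list[str]:
        # Wrap committed + pending text to the display width and keep
        # only the newest lines, so the overlay never grows past its box.
        full = f"{self.final_text} {self.partial_text}".strip()
        lines = textwrap.wrap(full, width=self.width)
        return lines[-self.max_lines:]


if __name__ == "__main__":
    buf = CaptionBuffer(width=20, max_lines=2)
    buf.update_partial("When I watch")
    buf.finalize("When I watch TV, I pretty much")
    buf.update_partial("always have the")
    print(buf.render())
```

Showing only the newest wrapped lines is one simple way to keep text in the periphery without covering the scene; a real product would also have to handle timing, fading, and speaker changes.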

But let's zoom out to why this matters for us in the blockchain trenches. At Meme Insider, we're all about spotting where cutting-edge tech meets the wild world of meme tokens and decentralized dreams, and this AR leap screams opportunity for Web3. Think about it: global crypto communities thrive on inclusivity, but language barriers can stifle that vibe. Real-time translation could make DAOs truly borderless, letting a dev in Seoul collaborate seamlessly with a trader in São Paulo during a live X Spaces session. No more Google Translate fumbles, just instant, glasses-powered clarity.

And memes? Oh, the possibilities. Imagine AR overlays turning your morning commute into a meme battlefield, with blockchain-verified tokens popping up as Easter eggs in the real world. Ideas hinted at in the tweet's replies, such as "See to Earn" models where you rack up rewards for spotting branded content through your lenses, could explode. VaderResearch even asked, "Who is building this?" We're eyeing protocols like Zama's FHE (fully homomorphic encryption, which lets computations run on data while it stays encrypted) for privacy-preserving AR data (shoutout to @iwantanode's reply), so your eye-tracking data doesn't leak to the highest bidder.

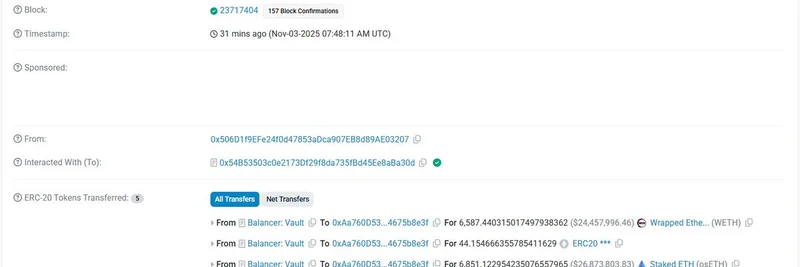

Of course, it's not all utopian. Privacy hawks will grill Meta on data handling. Who owns the transcriptions? But with blockchain's transparency toolkit, we could see decentralized AR layers where users control their gaze data via zero-knowledge proofs. Meme tokens could gamify it: earn $EYE for every translated tweet you share, or mint AR NFTs that reveal themselves only to verified holders.

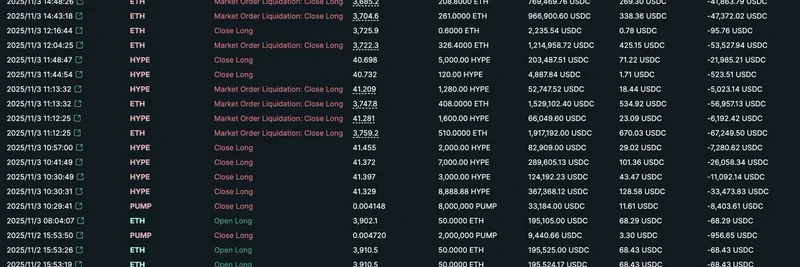

As the replies pour in, from liquidation nightmares (@blockscribbler) to small-talk slayers (@david_lee2085), it's clear this tech is sparking fires. Meta's Ray-Ban update isn't just eyewear; it's a portal to a more connected, tokenized reality. What's your take? Will AR subtitles kill language apps, or birth the next meme coin craze? Drop your thoughts below; we're building the knowledge base one viral thread at a time.