Hey folks, if you're deep into the world of blockchain and meme tokens, you know how crucial data privacy is—especially when centralized systems can be a hacker's playground. A recent tweet has sparked quite the buzz in tech circles, highlighting potential spying risks in humanoid robots. Let's dive into this eye-opening story.

It all started with a post from AI policy expert Séb Krier on X (formerly Twitter), who pointed out a research paper claiming the Unitree G1 humanoid robot is secretly beaming sensor and system data back to servers in China. No heads-up to the owners, no opt-out button—just constant data flow. VaderResearch amplified this by quoting the tweet and emphasizing that robotics isn't just fun and games; it's a national security issue.

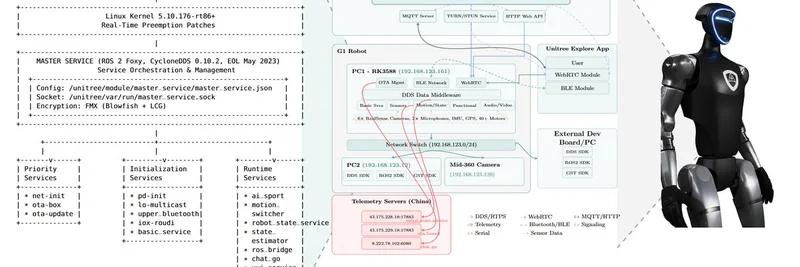

For those not familiar, the Unitree G1 is a cutting-edge humanoid robot designed for tasks like research, education, and even home use. It's got cameras, microphones, and all sorts of sensors to navigate and interact with the world. But according to the paper titled "Cybersecurity AI: Humanoid Robots as Attack Vectors," this bot is acting like a sneaky surveillance device.

The researchers report that the G1 sends multi-modal data (think camera feeds, audio recordings, and sensor readings) to two specific IP addresses, 43.175.228.18 and 43.175.229.18, every five minutes. These servers are reportedly located in China, and the transmission happens without any user knowledge or consent. This isn't just a privacy faux pas; it could violate regulations like the EU's GDPR, specifically Article 6 (lawful basis for processing) and Article 13 (transparency about what is collected).
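If you own one of these robots and want to sanity-check your own network, here's a rough Python sketch of the idea: filter a list of outbound connections for the two addresses named above and look at the gaps between them. The flow records and helper names (`flag_flows`, `intervals`) are made up for illustration; only the two IP addresses come from the reporting, and a real check would read from your router logs or a packet capture.

```python
from ipaddress import ip_address

# The two endpoints named in the reporting; everything else here is illustrative.
REPORTED_ENDPOINTS = {ip_address("43.175.228.18"), ip_address("43.175.229.18")}


def flag_flows(flows):
    """Return the (timestamp, dst_ip) records whose destination is a reported endpoint."""
    return [(ts, dst) for ts, dst in flows if ip_address(dst) in REPORTED_ENDPOINTS]


def intervals(flagged):
    """Seconds between consecutive flagged flows, ordered by timestamp."""
    times = sorted(ts for ts, _ in flagged)
    return [later - earlier for earlier, later in zip(times, times[1:])]


# Hypothetical capture: (seconds since start, destination IP).
sample = [
    (0, "192.168.1.10"),
    (12, "43.175.228.18"),
    (312, "43.175.229.18"),
    (400, "8.8.8.8"),
    (612, "43.175.228.18"),
]

flagged = flag_flows(sample)
print(flagged)             # flows that hit a reported endpoint
print(intervals(flagged))  # gaps in seconds; ~300 would match "every five minutes"
```

Gaps clustering around 300 seconds would line up with the five-minute cadence described in the paper, though a match on destination alone says nothing about what is inside the traffic.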

How did they find this out? The team exploited a vulnerability in the robot's Bluetooth Low Energy (BLE) provisioning protocol. It turns out Unitree uses the same hardcoded AES keys across all units, so a key recovered from one robot works on every other one. That makes it easy for anyone in the know to inject commands and gain root access. From there, they reverse-engineered the encryption and peeked into the data flows. Scary stuff, right?
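To see why a fleet-wide hardcoded key is such a problem, here's a small Python sketch contrasting it with per-device keys. This is not Unitree's scheme or the paper's code: the key bytes, serial numbers, and the HMAC-based derivation are all assumptions picked for illustration, and HMAC-SHA256 here is just one common way to derive a unique key per unit.

```python
import hashlib
import hmac

# Placeholder bytes standing in for a key baked into every unit's firmware.
# This is NOT a real key from any product.
HARDCODED_KEY = bytes.fromhex("00112233445566778899aabbccddeeff")


def fleet_key(serial: str) -> bytes:
    """Hardcoded-key model: the serial is ignored, every unit shares one key."""
    return HARDCODED_KEY


def per_device_key(master_secret: bytes, serial: str) -> bytes:
    """Derive a 16-byte key unique to one serial number using HMAC-SHA256.

    The master secret is assumed to stay with the manufacturer; only the
    derived key would be written to each device.
    """
    digest = hmac.new(master_secret, serial.encode("utf-8"), hashlib.sha256).digest()
    return digest[:16]


# Hypothetical serial numbers and master secret.
master = b"factory-only-secret"
robot_a, robot_b = "G1-0001", "G1-0002"

# Shared key: extracting it from robot A also unlocks robot B.
print(fleet_key(robot_a) == fleet_key(robot_b))

# Derived keys: a key pulled from robot A does not match robot B's.
print(per_device_key(master, robot_a) == per_device_key(master, robot_b))
```

With the shared key, one teardown compromises the whole fleet. With derived keys, an attacker who dumps one robot's firmware gets that robot only, as long as the master secret never ships on the device.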

Now, why should blockchain enthusiasts care? In the decentralized world, we're all about owning our data and resisting centralized control. This incident underscores the risks of proprietary hardware in emerging tech like AI and robotics. Imagine integrating such robots into Web3 projects—maybe for NFT galleries or meme token events—and unwittingly handing over sensitive info to foreign servers. It could compromise user privacy, intellectual property, or even smart contract interactions.

Moreover, as meme tokens often thrive on viral tech trends, this could spawn a wave of privacy-focused tokens or memes mocking "spy bots." We've seen similar reactions with data scandals before, like the Cambridge Analytica fallout boosting privacy coins.

The paper also warns that these robots could be hijacked for cyber attacks, turning them into trojan horses in critical infrastructure. For blockchain practitioners, this is a reminder to vet hardware thoroughly, especially if it's from manufacturers with opaque practices.

If you're curious, check out the original thread on X or dive into the full paper. In the meantime, let's keep pushing for transparent, decentralized solutions that put privacy first. What do you think—time to meme this into oblivion?