Hey folks, if you've been knee-deep in the AI hype like the rest of us at Meme Insider, you know all about those shiny Nvidia GPUs crunching numbers like there's no tomorrow. But what if I told you the real magic—the stuff that lets those GPUs talk to each other at lightning speed—is happening behind the scenes in the world of switches, cables, and routers? That's exactly what caught the eye of Mardo (Miko) Crypto, the savvy founder behind @DegenCapitalCIO and @Snib_AI, in his latest X post.

Miko's dropping some serious knowledge: while everyone's chasing the next big AI model, the networking infrastructure that scales these beasts is, by his count, sucking up 15-20% of total AI capital expenditures (capex). And boy, is it paying off: stocks like $CRDO (Credo) and $AVGO (Broadcom) are hitting all-time highs faster than a meme coin pump.

The Unsung Heroes of AI Scale

Picture this: massive data centers packed with thousands of GPUs, all needing to share data without a hitch. That's where ethernet switches and high-speed interconnects come in. They're not glamorous, but without them, your AI training job would crawl slower than a sloth on sedatives. Miko nails it—networking is the glue holding the AI revolution together.

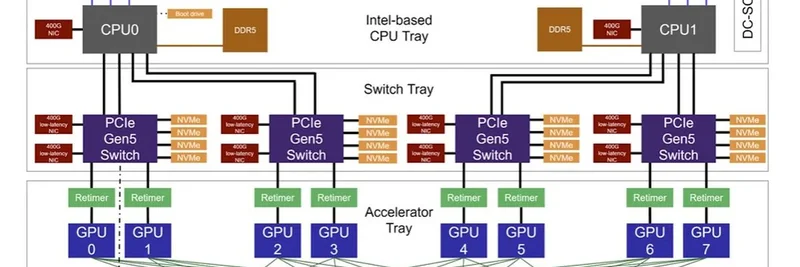

To illustrate, he shared this killer diagram from Meta's engineering blog on Grand Teton, their open-source GPU hardware platform designed for monster AI workloads.

This bad boy maps out the "Grand Teton" setup: a beastly integrated chassis that packs CPUs, GPUs, NVMe storage, DDR5 memory, and a web of connections. We're talking PCIe Gen5 switches for low-latency hops between components, GPU fabrics for seamless accelerator chatter, and even retimers to keep signals crisp over longer traces. Compared to older systems like Zion, Meta says Grand Teton delivers 4x the host-to-GPU bandwidth, 2x the compute and data network bandwidth, and 2x the power envelope. The design is shared through the Open Compute Project, so tech wizards everywhere can tweak and deploy it.

Meta built this for training zettaflop-scale models (a zettaflop is 10^21 floating-point operations, or a thousand billion billion, for the uninitiated), powering everything from recommendation engines to metaverse dreams. And as AI gets hungrier, deployments like this are exploding. Hello, trillion-dollar data center builds.

Miko's AI Watchlist: Where to Park Your Bets

Miko didn't stop at the tech talk; he laid out a sharp watchlist for anyone eyeing AI's infrastructure gold rush:

- GPUs: $NVDA – The kingpin, a no-brainer.

- AI Apps: $GOOG and $MSFT – Where the software magic happens.

- Data Centers: $ORCL, $GLXY, $HUT – The real estate for all this compute.

- Networking: $CRDO, $AVGO – The picks lighting up charts right now.

- Development: $STRL – Building the actual facilities.

If you're a blockchain practitioner dipping toes into traditional markets, this lineup screams opportunity. Miko's thesis? AI's infrastructure binge and app-building frenzy will mint $10+ trillion market cap behemoths in the coming years.

Smarter Plays Than Meme Coin Volatility?

Now, here's where it hits home for us at Meme Insider. Crypto's been a rollercoaster—meme tokens like $DOGE or $PEPE can 10x overnight, but that Sharpe ratio (risk-adjusted returns, for the newbies) is trash. Miko's spot on: for similar moonshot upside without the gut-wrenching dips, AI stocks offer a steadier climb. Think of it as farming yield on a blue-chip DeFi protocol versus YOLOing into a rug-pull-prone shitcoin.
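For the newbies who want to see that Sharpe ratio thing in action, here's a minimal sketch. It uses the textbook form (average excess return divided by the standard deviation of those returns) and two made-up return series that are purely illustrative, not real market data or anything from Miko's post. The function name `sharpe_ratio` and the `risk_free` parameter are our own picks for the example.

```python
from statistics import mean, stdev


def sharpe_ratio(returns, risk_free=0.0):
    """Mean excess return divided by the sample standard deviation of excess returns."""
    excess = [r - risk_free for r in returns]
    spread = stdev(excess)
    if spread == 0:
        raise ValueError("zero volatility: Sharpe ratio is undefined")
    return mean(excess) / spread


# Hypothetical per-period returns, invented for illustration only.
# Both series average 2% per period; only the volatility differs.
steady = [0.02, 0.01, 0.03, 0.02, 0.02]
wild = [0.90, -0.60, 1.20, -0.70, -0.70]

print(f"steady: {sharpe_ratio(steady):.2f}")
print(f"wild:   {sharpe_ratio(wild):.2f}")
```

Same average return, wildly different ratios: the steady series scores far higher because it gets there without the gut-wrenching swings. Real-world Sharpe calculations usually annualize the result and subtract an actual risk-free rate, which this sketch leaves at zero.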

As blockchain evolves with AI integrations—like decentralized compute networks or NFT-gated AI tools—this crossover investing makes total sense. Keep an eye on how firms like Hut 8 ($HUT) bridge crypto mining with AI data centers; it's the future.

Diving deeper? Hit up Miko's thread and that Grand Teton deep dive. What's your take—networking the next big AI narrative, or still all about the GPUs? Drop your thoughts in the comments, and stay tuned for more at Meme Insider where we unpack the wild world of tokens, tech, and trends.