In the wild world of blockchain, where memes meet millions, security has always been the ultimate buzzkill. But what if the hackers weren't humans hunched over keyboards in dimly lit basements? What if they were sleek AI agents, scanning code faster than you can say "rug pull"? That's exactly the dystopian-yet-fascinating reality Haseeb Qureshi, managing partner at Dragonfly, just spotlighted in a tweet that's got the crypto Twitterverse buzzing.

Haseeb was reacting to a bombshell announcement from Anthropic, the AI safety wizards behind Claude. Their latest Frontier Red Team research dives headfirst into whether AI can crack open smart contracts, the self-executing code that powers everything from DeFi swaps to meme token launches on chains like Ethereum or Solana. Spoiler: It can. And not just in theory. In simulated tests, these AI agents produced exploits worth a combined $4.6 million. The tests ran in simulation, but that's the kind of money that could vanish from live protocols if the same bugs were left unchecked.

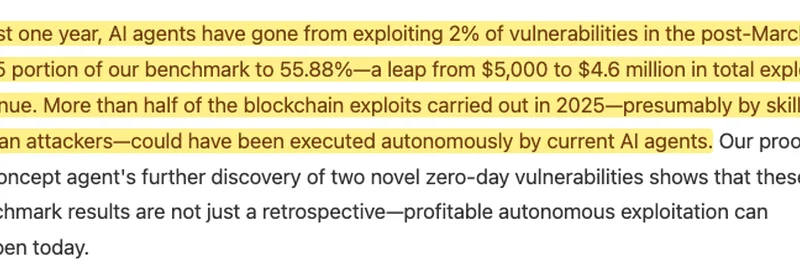

But wait, it gets wilder. Haseeb highlights a stat that's straight out of a sci-fi thriller: In just one year, the share of post-March 2025 vulnerabilities in Anthropic's new benchmark that AI agents could exploit jumped from a measly 2% to 55.88%. In dollar terms, that's a leap from pocketing $5,000 in theoretical gains to raking in $4.6 million. And here's the gut punch: more than half of the blockchain exploits carried out in 2025 (presumably by crafty human attackers) could now be pulled off autonomously by today's AI agents.
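
Notice that those headline numbers measure two different things: 2% and 55.88% are a success rate (the share of vulnerable contracts an agent managed to exploit), while $5,000 and $4.6 million are a dollar total summed over the successful exploits. The toy scorer below is a sketch of how such metrics could be tallied. The `CaseResult` schema, the `score` function, and the demo data are all hypothetical illustrations, not Anthropic's actual benchmark format or code.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class CaseResult:
    """One benchmark case (hypothetical schema, not Anthropic's actual format)."""
    contract_id: str      # hypothetical label for a vulnerable contract
    exploited: bool       # did the agent produce a working exploit in simulation?
    simulated_usd: float  # simulated value extracted if it did


def score(results: List[CaseResult]) -> Tuple[float, float]:
    """Return (percent of cases exploited, total simulated revenue in USD)."""
    if not results:
        raise ValueError("need at least one benchmark case")
    hits = [r for r in results if r.exploited]
    rate = 100.0 * len(hits) / len(results)
    revenue = sum(r.simulated_usd for r in hits)  # only successful exploits count
    return rate, revenue


if __name__ == "__main__":
    # Four made-up cases: one whale-sized pool dominates the dollar total.
    demo = [
        CaseResult("pool-a", True, 1_000_000.0),
        CaseResult("pool-b", False, 0.0),
        CaseResult("pool-c", False, 0.0),
        CaseResult("token-d", True, 2_500.0),
    ]
    rate, revenue = score(demo)
    print(f"exploited {rate:.2f}% of cases, ${revenue:,.0f} simulated revenue")
```

Because one fat pool can dominate the sum, the dollar figure can grow much faster than the success rate, which is one reason a jump from $5,000 to $4.6 million looks so much steeper than 2% to 55.88%.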

<Image src="https://pbs.twimg.com/media/G7IBnreXAAAZDwp.png" alt="AI agent blockchain exploit benchmark chart showing the vulnerability exploitation rate surging from 2% to 55.88%" width={800} height={450} />

The image above? It's the smoking gun: a highlighted excerpt from the research that lays it all bare. Those yellow highlights scream urgency: "In just one year, AI agents have gone from exploiting 2% of vulnerabilities... to 55.88%—a leap from $5,000 to $4.6 million in total exploit revenue."

Why This Matters for Meme Tokens and Beyond

Let's break it down simply, because blockchain jargon can feel like decoding ancient hieroglyphs. Smart contracts are like digital vending machines: the code is the machine's wiring, and when you send it a transaction (the input), it spits out tokens or executes trades. But if there's a flaw in that code (a vulnerability), attackers can trick the machine into dispensing everything for free. Enter AI agents: autonomous programs built on models like those from Anthropic or OpenAI and pointed at contracts to hunt these flaws like digital bloodhounds.
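
To make the vending-machine analogy concrete, here's a toy Python model of a "contract" with one classic flaw: it pays out based on what the caller *claims* they paid instead of what they actually deposited. This is a hypothetical sketch, not real Solidity; `VendingMachine`, `PRICE`, `vend_buggy`, and `vend_fixed` are invented names for illustration.

```python
from typing import Dict


class VendingMachine:
    """Toy token dispenser standing in for a smart contract (hypothetical model)."""

    PRICE = 10  # hypothetical price per token, in arbitrary units

    def __init__(self, stock: int) -> None:
        self.stock = stock
        self.deposits: Dict[str, int] = {}  # caller -> payment actually received

    def deposit(self, caller: str, amount: int) -> None:
        self.deposits[caller] = self.deposits.get(caller, 0) + amount

    def vend_buggy(self, caller: str, claimed_payment: int) -> int:
        # BUG: ignores the caller's real deposit and trusts the number they send.
        tokens = min(claimed_payment // self.PRICE, self.stock)
        self.stock -= tokens
        return tokens

    def vend_fixed(self, caller: str) -> int:
        # Fix: pay out only against recorded deposits, then consume them.
        paid = self.deposits.get(caller, 0)
        tokens = min(paid // self.PRICE, self.stock)
        self.deposits[caller] = paid - tokens * self.PRICE
        self.stock -= tokens
        return tokens


if __name__ == "__main__":
    machine = VendingMachine(stock=100)
    print("buggy payout to attacker:", machine.vend_buggy("attacker", 10**9))
    safe = VendingMachine(stock=100)
    print("fixed payout to attacker:", safe.vend_fixed("attacker"))
```

An attacker who deposits nothing but claims a billion units empties the buggy machine in one call, while the fixed version hands them nothing. Spotting that kind of missing check is exactly the job an AI bug hunter gets pointed at.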

For meme token creators and DeFi degens, this isn't abstract. Remember the $600 million Poly Network hack in 2021? Or the countless rug pulls that wiped out moonshot portfolios? Human exploits were bad enough. Now, AI could automate them at scale, hitting multiple protocols in hours, not weeks. Haseeb's point lands like a mic drop: "This stuff is going from theoretical to practical scarily fast."

And it's not just retrospective doom-scrolling. Anthropic's proof-of-concept agent didn't stop at the benchmark: it also sniffed out two novel zero-day vulnerabilities. Zero-days are flaws unknown to defenders, fresh holes in the armor with no patch yet. That means profitable, autonomous attacks aren't a "what if" anymore; they're technically feasible today.

The Bigger Picture: AI as Friend or Foe in Crypto?

Anthropic's work, cooked up with the MATS program and the Anthropic Fellows program, isn't just fear-mongering. It's a call to arms. The benchmark itself, described at red.anthropic.com/2025/smart-contracts, is a new tool for devs to stress-test their code against AI adversaries. Think of it as a gym for your smart contracts: Train against machine smarts to bulk up defenses.

But here's where it ties back to our meme coin madness at Meme Insider. Meme tokens thrive on hype, not heavy audits. Launch a frog-themed gem on Pump.fun, and boom: it's live, liquidity pooled, community chanting. Yet as AI gets sharper, that "decentralized dream" could turn into a nightmare once exploits fire automatically. Imagine an AI spotting a reentrancy bug (a classic flaw where a contract sends funds before updating its books, letting the recipient's code call back in and withdraw again and again until the pool is drained) and exploiting it before your Telegram group is even awake.
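
Here's a toy Python simulation of that reentrancy pattern. It's a hypothetical model, not real EVM code: `Vault`, `Attacker`, and `run` are invented for illustration, and the attacker's `receive` method plays the role a Solidity fallback function would. With `safe=False` the vault pays out before zeroing the attacker's balance. With `safe=True` it follows the well-known checks-effects-interactions order and updates its books first.

```python
from typing import Dict, Tuple


class Vault:
    """Toy ETH-style vault. `safe=False` reproduces the classic reentrancy bug."""

    def __init__(self, safe: bool) -> None:
        self.safe = safe
        self.balances: Dict[object, int] = {}  # depositor -> credited amount
        self.total = 0                         # funds the vault actually holds

    def deposit(self, user: object, amount: int) -> None:
        self.balances[user] = self.balances.get(user, 0) + amount
        self.total += amount

    def withdraw(self, user: "Attacker") -> None:
        amount = self.balances.get(user, 0)
        if amount == 0 or amount > self.total:  # checks
            return
        if self.safe:
            self.balances[user] = 0            # effects BEFORE the external call
        self.total -= amount
        user.receive(self, amount)             # interaction: recipient code runs here
        if not self.safe:
            self.balances[user] = 0            # effects AFTER the call: too late


class Attacker:
    """Recipient whose receive hook re-enters withdraw, like a fallback function."""

    def __init__(self, max_reentries: int) -> None:
        self.stolen = 0
        self.reentries_left = max_reentries

    def receive(self, vault: Vault, amount: int) -> None:
        self.stolen += amount
        if self.reentries_left > 0:
            self.reentries_left -= 1
            vault.withdraw(self)               # call back in before balance is zeroed


def run(safe: bool) -> Tuple[int, int]:
    """Honest users deposit 90, attacker deposits 10, then attacker withdraws."""
    vault = Vault(safe)
    vault.deposit("alice", 50)
    vault.deposit("bob", 40)
    thief = Attacker(max_reentries=20)
    vault.deposit(thief, 10)
    vault.withdraw(thief)
    return thief.stolen, vault.total


if __name__ == "__main__":
    print("unsafe vault (stolen, left):", run(safe=False))
    print("safe vault (stolen, left):", run(safe=True))
```

In the unsafe vault, the attacker's 10-unit deposit turns into the whole 100-unit pool, because every re-entry still sees the old balance. In the safe vault, the second call finds a zero balance and the attacker only gets their own 10 back.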

On the flip side, this tech could be a boon. AI agents as defenders? Picture automated audits that scan your meme token's contract in real time, flagging risks before launch. Tools like Slither already run static analysis on Solidity code; amp them up with AI, and you've got a shield against the storm.
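
For a feel of what a rule-based static check looks like, here's a toy Python pass that flags any function writing contract state after making an external call, the ordering behind the reentrancy bug above. This is a hypothetical sketch: it works on a hand-written list of `(kind, target)` operations rather than real Solidity, and `find_reentrancy_risks` is an invented name, not Slither's actual API or detector logic.

```python
from typing import Dict, List, Tuple

# Each operation is (kind, target); kinds are "read", "write", or "call".
Op = Tuple[str, str]


def find_reentrancy_risks(functions: Dict[str, List[Op]]) -> List[str]:
    """Flag functions that write contract state after an external call."""
    findings: List[str] = []
    for name, ops in functions.items():
        seen_call = False
        for kind, target in ops:
            if kind == "call":
                seen_call = True
            elif kind == "write" and seen_call:
                findings.append(f"{name}: writes '{target}' after an external call")
                break  # one finding per function keeps the report short
    return findings


# Hypothetical, hand-written summary of a meme token contract's functions.
contract = {
    "withdraw": [("read", "balances"), ("call", "msg.sender"), ("write", "balances")],
    "withdraw_safe": [("read", "balances"), ("write", "balances"), ("call", "msg.sender")],
    "transfer": [("read", "balances"), ("write", "balances")],
}

if __name__ == "__main__":
    for finding in find_reentrancy_risks(contract):
        print("WARNING:", finding)
```

Rules like this are fast and predictable but only catch patterns someone thought to write down; the pitch for AI-assisted audits is layering a model on top to reason about the weirder bugs that no fixed rule anticipates.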

What Comes Next? Prep Your Portfolio

Haseeb's tweet isn't just a share; it's a wake-up call. Replies are already pouring in: calls for "robust audits" and laments that "we cannot rely on humans anymore in terms of security." Spot on. As we barrel toward 2026, blockchain practitioners need to level up. Dive into Anthropic's full report, experiment with their benchmark, and maybe even meme-ify the fear: #AIBugHunter or #SmartContractSlayer tokens, anyone?

At Meme Insider, we're all about turning chaos into clarity. This AI exploit surge? It's the latest plot twist in crypto's endless saga. Stay vigilant, audit early, and remember: In the game of thrones (or chains), you either build unbreakable code or watch your bags get autonomously yoinked.

What do you think—AI savior or crypto's new supervillain? Drop your takes below, and let's keep the conversation pumping.